March 2011

Monthly Archive

March 31, 2011

One talk at the workshop was nominally a school talk by Laurent Freidel, but it’s interesting and distinctive enough in its own right that I wanted to consider it by itself. It was based on this paper on the “Principle of Relative Locality”. This isn’t so much a new theory, as an exposition of what ought to happen when one looks at a particular limit of any putative theory that has both quantum field theory and gravity as (different) limits of it. This leads through some ideas, such as curved momentum space, which have been kicking around for a while. The end result is a way of accounting for apparently non-local interactions of particles, by saying that while the particles themselves “see” the interactions as local, distant observers might not.

Einstein’s gravity describes a regime where Newton’s gravitational constant $G_N$ is important but Planck’s constant $\hbar$ is negligible, and (special-relativistic) quantum field theory assumes $\hbar$ significant but $G_N$ not. Both of these assume there is a special velocity scale, given by the speed of light $c$, whereas classical mechanics assumes that all three can be neglected (i.e. $G_N$ and $\hbar$ are zero, and $c$ is infinite). The guiding assumption is that these are all approximations to some more fundamental theory, called “quantum gravity” just because it accepts that both $G_N$ and $\hbar$ (as well as $c$) are significant in calculating physical effects. So GR and QFT incorporate two of the three constants each, and classical mechanics incorporates none. The “principle of relative locality” arises when we consider a slightly different approximation to this underlying theory.

This approximation works with a regime where $G_N$ and $\hbar$ are each negligible, but the ratio is not – this being related to the Planck mass $m_p \sim \sqrt{\hbar / G_N}$. The point is that this is an approximation with no special length scale (“Planck length”), but instead a special energy scale (“Planck mass”) which has to be preserved. Since energy and momentum are different parts of a single 4-vector, this is also a momentum scale; we expect to see some kind of deformation of momentum space, at least for momenta that are bigger than this scale. The existence of this scale turns out to mean that momenta don’t add linearly – at least, not unless they’re very small compared to the Planck scale.

So what is “Relative Locality”? In the paper linked above, it’s stated like so:

Physics takes place in phase space and there is no invariant global projection that gives a description of processes in spacetime. From their measurements local observers can construct descriptions of particles moving and interacting in a spacetime, but different observers construct different spacetimes, which are observer-dependent slices of phase space.

Motivation

This arises from taking the basic insight of general relativity – the requirement that physical principles should be invariant under coordinate transformations (i.e. diffeomorphisms) – and extending it so that instead of applying just to spacetime, it applies to the whole of phase space. Phase space (which, in this limit where $\hbar \to 0$, replaces the Hilbert space of a truly quantum theory) is the space of position-momentum configurations (of things small enough to treat as point-like, in a given fixed approximation). Having no $G_N$ means we don’t need to worry about any dynamical curvature of “spacetime” (which doesn’t exist), and having no Planck length means we can blithely treat phase space as a manifold with coordinates valued in the real line (which has no special scale). Yet, having a special mass/momentum scale says we should see some purely combined “quantum gravity” effects show up.

The physical idea is that phase space is an accurate description of what we can see and measure locally. Observers (whom we assume small enough to be considered point-like) can measure their own proper time (they “have a clock”) and can detect momenta (by letting things collide with them and measuring the energy transferred locally and its direction). That is, we “see colors and angles” (i.e. photon energies and differences of direction). Beyond this, one shouldn’t impose any particular theory of what momenta do: we can observe the momenta of separate objects and see what results when they interact and deduce rules from that. As an extension of standard physics, this model is pretty conservative. Now, conventionally, phase space would be the cotangent bundle of spacetime $T^*M$. This model is based on the assumption that objects can be at any point, and wherever they are, their space of possible momenta is a vector space. Being a bundle, with a global projection onto $M$ (taking $(x,p)$ to $x$), is exactly what this principle says doesn’t necessarily obtain. We still assume that phase space will be some symplectic manifold. But we don’t assume a priori that momentum coordinates give a projection whose fibres happen to be vector spaces, as in a cotangent bundle.

Now, a symplectic manifold still looks locally like a cotangent bundle (Darboux’s theorem). So even if there is no universal “spacetime”, each observer can still locally construct a version of “spacetime” by slicing up phase space into position and momentum coordinates. One can, by brute force, extend the spacetime coordinates quite far, to distant points in phase space. This is roughly analogous to how, in special relativity, each observer can put their own coordinates on spacetime and arrive at different notions of simultaneity. In general relativity, there are issues with trying to extend this concept globally, but it can be done under some conditions, giving the idea of “space-like slices” of spacetime. In the same way, we can construct “spacetime-like slices” of phase space.

Geometrizing Algebra

Now, if phase space is a cotangent bundle, momenta can be added (the fibres of the bundle are vector spaces). Some more recent ideas about “quasi-Hamiltonian spaces” (initially introduced by Alekseev, Malkin and Meinrenken) conceive of momenta as “group-valued” – rather than taking values in the dual of some Lie algebra (the way, classically, momenta are dual to velocities, which live in the Lie algebra of infinitesimal translations). For small momenta, these are hard to distinguish, so even group-valued momenta might look linear, but the premise is that we ought to discover this by experiment, not assumption. We certainly can detect “zero momentum” and for physical reasons can say that given two things with two momenta $(p,q)$, there’s a way of combining them into a combined momentum $p \oplus q$. Think of doing this physically – transfer all momentum from one particle to another, as seen by a given observer. Since the same momentum at the observer’s position can be either coming in or going out, this operation has a “negative” with $(\ominus p) \oplus p = 0$.

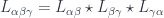

We do have a space of momenta at any given observer’s location – the total of all momenta that can be observed there, and this space now has some algebraic structure. But we have no reason to assume up front that $\oplus$ is either commutative or associative (let alone that it makes momentum space at a given observer’s location into a vector space). One can interpret this algebraic structure as giving some geometry. The commutator for $\oplus$ gives a metric on momentum space. This is a bilinear form which is implicitly defined by the “norm” that assigns a kinetic energy to a particle with a given momentum. The associator given by $p \oplus (q \oplus r) - (p \oplus q) \oplus r$, infinitesimally near $0$ where this makes sense, gives a connection. This defines a “parallel transport” of a finite momentum $p$ in the direction of a momentum $q$ by saying infinitesimally what happens when adding $dq$ to $p$.

Various additional physical assumptions – like the momentum-space “duals” of the equivalence principle (that the combination of momenta works the same way for all kinds of matter regardless of charge), or the strong equivalence principle (that inertial mass and rest mass energy per the relation $E = mc^2$ are the same) and so forth can narrow down the geometry of this metric and connection. Typically we’ll find that it needs to be Lorentzian. With strong enough symmetry assumptions, it must be flat, so that momentum space is a vector space after all – but even with fairly strong assumptions, as with general relativity, there’s still room for this “empty space” to have some intrinsic curvature, in the form of a momentum-space “dual cosmological constant”, which can be positive (so momentum space is closed like a sphere), zero (the vector space case we usually assume) or negative (so momentum space is hyperbolic).

This geometrization of what had been algebraic is somewhat analogous to what happened with velocities (i.e. vectors in spacetime) when the theory of special relativity came along. Insisting that the “invariant” scale $c$ be the same in every reference system meant that the addition of velocities ceased to be linear. At least, it did if you assume that adding velocities has an interpretation along the lines of: “first, from rest, add velocity v to your motion; then, from that reference frame, add velocity w”. While adding spacetime vectors still worked the same way, one had to rephrase this rule if we think of adding velocities as observed within a given reference frame – this became $v \oplus w = \frac{v + w}{1 + vw}$ (scaling so $c = 1$ and assuming the velocities are in the same direction). When velocities are small relative to $c$, this looks roughly like linear addition. Geometrizing the algebra of momentum space is thought of a little differently, but similar things can be said: we think operationally in terms of combining momenta by some process. First transfer (group-valued) momentum $p$ to a particle, then momentum $q$ – the connection on momentum space tells us how to translate these momenta into the “reference frame” of a new observer with momentum shifted relative to the starting point. Here again, the special momentum scale $m_p$ (which is also a mass scale since a momentum has a corresponding kinetic energy) is a “deformation” parameter – for momenta that are small compared to this scale, things seem to work linearly as usual.
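To make the velocity analogy concrete, here is a minimal sketch of the collinear addition rule in units where $c = 1$. The function name `add_velocities` and the sample values are mine, chosen only for illustration; nothing here is taken from the paper.

```python
def add_velocities(v: float, w: float) -> float:
    """Relativistic addition of collinear velocities, in units where c = 1."""
    return (v + w) / (1.0 + v * w)

# Small velocities: the result is very close to the linear sum v + w.
slow = add_velocities(1e-4, 2e-4)

# Large velocities: the result stays below c = 1, unlike the linear sum 1.8.
fast = add_velocities(0.9, 0.9)

# The rule has an identity (0) and an inverse (-v), much like the
# "zero momentum" and "negative" described for combining momenta.
back_to_rest = add_velocities(0.5, -0.5)

print(slow, fast, back_to_rest)
```

In this one-dimensional case the rule is still commutative and associative; it is only the linearity that fails. The momentum-space story allows for more general failures than this toy case shows.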

There’s some discussion in the paper which relates this to DSR (either “doubly” or “deformed” special relativity), which is another postulated limit of quantum gravity: a variation of SR with both a special velocity and a special mass/momentum scale, meant to describe “what SR looks like near the Planck scale”. It treats spacetime as a noncommutative space, and generalizes the Lorentz group to a Hopf algebra which is a deformation of it. In DSR, the noncommutativity of “position space” is directly related to curvature of momentum space. In the “relative locality” view, we accept a classical phase space, but not a classical spacetime within it.

Physical Implications

We should understand this scale as telling us where “quantum gravity effects” should start to become visible in particle interactions. This is a fairly large scale for subatomic particles. The Planck mass as usually given is about 21 micrograms: small for normal purposes, about the size of a small sand grain, but very large for subatomic particles. Converting to momentum units with $c$, this is about 6 kg m/s: on the order of the momentum of a kicked soccer ball or so. For a subatomic particle this is a lot.
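The arithmetic behind these figures can be checked in a few lines. This sketch assumes the usual definition of the Planck mass with $c$ restored, $m_p = \sqrt{\hbar c / G_N}$, and uses rounded values of the constants, so the digits should be treated as approximate.

```python
import math

# Rounded values of the constants (SI units); digits are approximate.
HBAR = 1.054571817e-34   # reduced Planck constant, J s
G_N = 6.67430e-11        # Newton's constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s

planck_mass = math.sqrt(HBAR * C / G_N)   # in kg
planck_momentum = planck_mass * C         # in kg m/s

print(f"Planck mass     ~ {planck_mass * 1e9:.1f} micrograms")
print(f"Planck momentum ~ {planck_momentum:.1f} kg m/s")
```

This gives a mass a little under 22 micrograms and a momentum of roughly 6.5 kg m/s, in line with the figures quoted above.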

This scale does raise a question for many people who first hear the argument that quantum gravity effects should become apparent around the Planck mass/momentum scale, since macro-objects like the aforementioned soccer ball still seem to have linearly-additive momenta. Laurent explained the problem with this intuition. For interactions of big, extended, but composite objects like soccer balls, one has to calculate not just one interaction, but all the various interactions of their parts, so the “effective” mass scale where the deformation would be seen becomes $N m_p$ where $N$ is the number of particles in the soccer ball. Roughly, the point is that a soccer ball is not a large “thing” for these purposes, but a large conglomeration of small “things”, whose interactions are “fundamental”. The “effective” mass scale tells us how we would have to alter the physical constants to be able to treat it as a “thing”. (This is somewhat related to the question of “effective actions” and renormalization, though these are a bit more complicated.)
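A rough order-of-magnitude sketch shows how much this rescaling matters. All the inputs are my own assumptions for illustration: the ball's mass and speed are guesses, and I count nucleons as the “particles”, ignoring electrons and any finer substructure.

```python
PLANCK_MOMENTUM = 6.5      # kg m/s, approximate value of m_p * c
NUCLEON_MASS = 1.67e-27    # kg, approximate proton/neutron mass
BALL_MASS = 0.43           # kg, assumed mass of a soccer ball
BALL_SPEED = 25.0          # m/s, assumed speed of a hard kick

# Rough count of constituents N, treating the ball as a bag of nucleons.
n_constituents = BALL_MASS / NUCLEON_MASS

# Effective deformation scale for the composite object: N times the Planck scale.
effective_scale = n_constituents * PLANCK_MOMENTUM

ball_momentum = BALL_MASS * BALL_SPEED
ratio = ball_momentum / effective_scale

print(f"N               ~ {n_constituents:.1e}")
print(f"ball momentum   ~ {ball_momentum:.1f} kg m/s")
print(f"effective scale ~ {effective_scale:.1e} kg m/s")
print(f"ratio           ~ {ratio:.1e}")
```

Under these assumptions the ball's momentum is comparable to the bare Planck momentum, but smaller than the effective scale by more than twenty orders of magnitude, which fits with its momenta appearing to add linearly.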

There are a number of possible experiments suggested in the paper, which Laurent mentioned in the talk. One involves a kind of “twin paradox” taking place in momentum space. In “spacetime”, a spaceship travelling a large loop at high velocity will arrive where it started having experienced less time than an observer who remained there (because of the Lorentzian metric) – and a dual phenomenon in momentum space says that particles travelling through loops (also in momentum space) should arrive displaced in space because of the relativity of localization. This could be observed in particle accelerators where particles make several transits of a loop, since the effect is cumulative. Another effect could be seen in astronomical observations: if an observer is observing some distant object via photons of different wavelengths (hence momenta), she might “localize” the object differently – that is, the two photons travel at “the same speed” the whole way, but arrive at different times because the observer will interpret the object as being at two different distances for the two photons.

This last one is rather weird, and I had to ask how one would distinguish this effect from a variable speed of light (predicted by certain other ideas about quantum gravity). How to distinguish such effects seems to be not quite worked out yet, but at least this is an indication that there are new, experimentally detectable, effects predicted by this “relative locality” principle. As Laurent emphasized, once we’ve noticed that not accepting this principle means making an a priori assumption about the geometry of momentum space (even if only in some particular approximation, or limit, of a true theory of quantum gravity), we’re pretty much obliged to stop making that assumption and do the experiments. Finding our assumptions were right would simply be revealing which momentum space geometry actually obtains in the approximation we’re studying.

A final note about the physical interpretation: this “relative locality” principle can be discovered by looking (in the relevant limit) at a Lagrangian for free particles, with interactions described in terms of momenta. It so happens that one can describe this without referencing a “real” spacetime: the part of the action that allows particles to interact when “close” only needs coordinate functions, which can certainly exist here, but are an observer-dependent construct. The conservation of (non-linear) momenta is specified via a Lagrange multiplier. The whole Lagrangian formalism for the mechanics of colliding particles works without reference to spacetime. Now, all the interactions (specified by the conservation of momentum terms) happen “at one location”, in that there will be an observer who sees them happening in the momentum space of her own location. But an observer at a different point may disagree about whether the interaction was local – i.e. happened at a single point in spacetime. Thus “relativity of localization”.

Again, this is no more bizarre (mathematically) than the fact that distant, relatively moving, observers in special relativity might disagree about simultaneity, whether two events happened at the same time. They have their own coordinates on spacetime, and transferring between them mixes space coordinates and time coordinates, so they’ll disagree whether the time-coordinate values of two events are the same. Similarly, in this phase-space picture, two different observers each have a coordinate system for splitting phase space into “spacetime” and “energy-momentum” coordinates, but switching between them may mix these two pieces. Thus, the two observers will disagree about whether the spacetime-coordinate values for the different interacting particles are the same. And so, one observer says the interaction is “local in spacetime”, and the other says it’s not. The point is that it’s local for the particles themselves (thinking of them as observers). All that’s going on here is the not-very-astonishing fact that in the conventional picture, we have no problem with interactions being nonlocal in momentum space (particles with very different momenta can interact as long as they collide with each other)… combined with the inability to globally and invariantly distinguish position and momentum coordinates.

What this means, philosophically, can be debated, but it does offer some plausibility to the claim that space and time are auxiliary, conceptual additions to what we actually experience, which just account for the relations between bits of matter. These concepts can be dispensed with even where we have a classical-looking phase space rather than Hilbert space (where, presumably, this is even more true).

…

Edit: On a totally unrelated note, I just noticed this post by Alex Hoffnung over at the n-Category Cafe which gives a lot of detail on issues relating to spans in bicategories that I had begun to think more about recently in relation to developing a higher-gauge-theoretic version of the construction I described for ETQFT. In particular, I’d been thinking about how the 2-group analog of restriction and induction for representations realizes the various kinds of duality properties, where we have adjunctions, biadjunctions, and so forth, in which units and counits of the various adjunctions have further duality. This observation seems to be due to Jim Dolan, as far as I can see from a brief note in HDA II. In that case, it’s really talking about the star-structure of the span (tri)category, but looking at the discussion Alex gives suggests to me that this theme shows up throughout this subject. I’ll have to take a closer look at the draft paper he linked to and see if there’s more to say…

March 24, 2011

As usual, this write-up process has been taking a while since life does intrude into blogging for some reason. In this case, because for a little less than a week, my wife and I have been on our honeymoon, which was delayed by our moving to Lisbon. We went to the Azores, or rather to São Miguel, the largest of the nine islands. We had a good time, roughly like so:

Now that we’re back, I’ll attempt to wrap up with the summaries of things discussed at the workshop on Higher Gauge Theory, TQFT, and Quantum Gravity. In the previous post I described talks which I roughly gathered under TQFT and Higher Gauge Theory, but the latter really ramifies out in a few different ways. As began to be clear before, higher bundles are classified by higher cohomology of manifolds, and so are gerbes – so in fact these are two slightly different ways of talking about the same thing. I also remarked, in the summary of Konrad Waldorf’s talk, the idea that the theory of gerbes on a manifold is equivalent to ordinary gauge theory on its loop space – which is one way to make explicit the idea that categorification “raises dimension”, in this case from parallel transport of points to that of 1-dimensional loops. Next we’ll expand on that theme, and then finally reach the “Quantum Gravity” part, and draw the connection between this and higher gauge theory toward the end.

Gerbes and Cohomology

The very first workshop speaker, in fact, was Paolo Aschieri, who has done a lot of work relating noncommutative geometry and gravity. In this case, though, he was talking about noncommutative gerbes, and specifically referred to this work with some of the other speakers. To be clear, this isn’t about gerbes with noncommutative group $G$, but about gerbes on noncommutative spaces. To begin with, it’s useful to express gerbes in the usual sense in the right language. In particular, he explained what a gerbe on a manifold $X$ is in concrete terms, giving Hitchin’s definition (viz). A $U(1)$ gerbe can be described as “a cohomology class” but it’s more concrete to present it as:

- a collection of line bundles $L_{\alpha \beta}$ associated with double overlaps $U_{\alpha \beta} = U_{\alpha} \cap U_{\beta}$. Note this gets an algebraic structure (multiplication $\star$ of bundles is pointwise $\otimes$, with an inverse given by the dual, $L^{-1} = L^*$), so we can require…
- $L_{\alpha \beta}^{-1} \cong L_{\beta \alpha}$, which helps define…
- transition functions $\lambda_{\alpha \beta \gamma}$ on triple overlaps $U_{\alpha \beta \gamma}$, which are sections of $L_{\alpha \beta \gamma} = L_{\alpha \beta} \star L_{\beta \gamma} \star L_{\gamma \alpha}$. If this product is trivial, there’d be a 1-cocycle condition here, but we only insist on the 2-cocycle condition…
- $\lambda_{\beta \gamma \delta} \lambda_{\alpha \gamma \delta}^{-1} \lambda_{\alpha \beta \delta} \lambda_{\alpha \beta \gamma}^{-1} = 1$
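As a sanity check on the combinatorics of the 2-cocycle condition, written as $\lambda_{\beta \gamma \delta} \lambda_{\alpha \gamma \delta}^{-1} \lambda_{\alpha \beta \delta} \lambda_{\alpha \beta \gamma}^{-1} = 1$ on quadruple overlaps, here is a toy sketch. It is a simplification of my own and not anything from the talk: the “functions” are constant $U(1)$ phases on four hypothetical patches, and $\lambda$ is built as a coboundary of pairwise phases, so the result is a trivial gerbe by construction.

```python
import cmath
import itertools
import random

random.seed(0)
patches = ["a", "b", "c", "d"]

# Hypothetical data: one U(1) phase f[(x, y)] per ordered pair of patches,
# with f[(y, x)] the inverse of f[(x, y)].
f = {}
for x, y in itertools.combinations(patches, 2):
    phase = cmath.exp(1j * random.uniform(0.0, 2.0 * cmath.pi))
    f[(x, y)] = phase
    f[(y, x)] = 1.0 / phase

def lam(x, y, z):
    """Triple-overlap phase, built as the coboundary of f."""
    return f[(x, y)] * f[(y, z)] * f[(z, x)]

def two_cocycle(a, b, c, d):
    """Left-hand side of the 2-cocycle condition on a quadruple overlap."""
    return lam(b, c, d) / lam(a, c, d) * lam(a, b, d) / lam(a, b, c)

value = two_cocycle("a", "b", "c", "d")
print(abs(value - 1.0))  # distance from 1, expected to be at rounding-error level
```

Every pairwise phase appears once together with its inverse, so the product collapses to 1 however the phases are chosen. An interesting gerbe is one whose $\lambda$ satisfies this condition without arising this way.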

This is a $U(1)$-gerbe on a commutative space. The point is that one can make a similar definition for a noncommutative space. If the space $X$ is associated with the algebra $A = C^{\infty}(X)$ of smooth functions, then a line bundle is a module for $A$, so if $A$ is noncommutative (thought of as a “space” $X$), a “bundle over $X$” is just defined to be an $A$-module. One also has to define an appropriate “covariant derivative” operator $D$ on this module, and the $\star$-product must be defined as well, and will be noncommutative (we can think of it as a deformation of the $\star$ above). The transition functions are sections: that is, elements of the modules in question. This means we can describe a gerbe in terms of a big stack of modules, with a chosen algebraic structure, together with some elements. The idea then is that gerbes can give an interpretation of cohomology of noncommutative spaces as well as commutative ones.

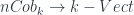

Mauro Spera spoke about a point of view of gerbes based on “transgressions”. The essential point is that an $n$-gerbe on a space $X$ can be seen as the obstruction to patching together a family of $(n-1)$-gerbes. Thus, for instance, a $U(1)$ 0-gerbe is a $U(1)$-bundle, which is to say a complex line bundle. As described above, a 1-gerbe can be understood as describing the obstacle to patching together a bunch of line bundles, and the obstacle lies in finding a cocycle $\lambda$ satisfying the requisite conditions. This obstacle is measured by the cohomology of the space. Saying we want to patch together $(n-1)$-gerbes on the fibre. He went on to discuss how this manifests in terms of obstructions to string structures on manifolds (already discussed at some length in the post on Hisham Sati’s school talk, so I won’t duplicate here).

A talk by Igor Bakovic, “Stacks, Gerbes and Etale Groupoids”, gave a way of looking at gerbes via stacks (see this for instance). The organizing principle is the classification of bundles by the space of maps into a classifying space – or, to get the category of principal $G$-bundles on $X$, the category $Hom(Sh(X),BG)$, where $Sh(X)$ is the category of sheaves on $X$ and $BG$ is the classifying topos of $G$-sets. (So we have geometric morphisms between the toposes as the objects.) Now, to get further into this, we use that $Sh(X)$ is equivalent to the category of Étale spaces over $X$ – this is a refinement of the equivalence between bundles and presheaves. Taking stalks of a presheaf gives a bundle, and taking sections of a bundle gives a presheaf – and these operations are adjoint.

The issue at hand is how to categorify this framework to talk about 2-bundles, and the answer is there’s a 2-adjunction between the 2-category $2Bun(X)$ of such things, and $Fib(X) = [\mathcal{O}(X)^{op},Cat]$, the 2-category of fibred categories over $X$. (That is, instead of looking at “sheaves of sets”, we look at “sheaves of categories” here.) The adjunction, again, involves taking stalks one way, and taking sections the other way. One hard part of this is getting a nice definition of “stalk” for stacks (i.e. for the “sheaves of categories”), and a good part of the talk focused on explaining how to get a nice tractable definition which is (fibre-wise) equivalent to the more natural one.

Bakovic did a bunch of this work with Branislav Jurco, who was also there, and spoke about “Nonabelian Bundle 2-Gerbes”. The paper behind that link has more details, which I’ve yet to entirely absorb, but the essential point appears to be to extend the description of “bundle gerbes” associated to crossed modules up to 2-crossed modules. Bundles, with a structure-group $G$, are classified by the cohomology $H^1(X,G)$ with coefficients in $G$; “bundle-gerbes” with a structure-crossed-module $H \rightarrow G$ can likewise be described by cohomology $H^1(X, H \rightarrow G)$. Notice this is a bit different from the description in terms of higher cohomology $H^2(X,G)$ for a $G$-gerbe, which can be understood as a bundle-gerbe using the shifted crossed module $G \rightarrow 1$ (when $G$ is abelian). The goal here is to generalize this part to nonabelian groups, and also pass up to “bundle 2-gerbes” based on a 2-crossed module, or crossed complex of length 2, $L \rightarrow H \rightarrow G$, as I described previously for Joao Martins’ talk. This would be classified in terms of cohomology valued in the 2-crossed module. The point is that one can describe such a thing as a bundle over a fibre product, which (I think – I’m not so clear on this part) deals with the same structure of overlaps as the higher cohomology in the other way of describing things.

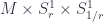

Finally, a talk that’s a little harder to classify than most, but which I’ve put here with things somewhat related to string theory, was Alexander Kahle’s on “T-Duality and Differential K-Theory”, based on work with Alessandro Valentino. This uses the idea of the differential refinement of cohomology theories – in this case, K-theory, which is a generalized cohomology theory, which is to say that K-theory satisfies the Eilenberg-Steenrod axioms (with the dimension axiom relaxed, hence “generalized”). Cohomology theories, including generalized ones, can have differential refinements, which pass from giving topological to geometrical information about a space. So, while K-theory assigns to a space the Grothendieck ring of the category of vector bundles over it, the differential refinement of K-theory does the same with the category of vector bundles with connection. This captures both local and global structures, which turns out to be necessary to describe fields in string theory – specifically, Ramond-Ramond fields. The point of this talk was to describe what happens to these fields under T-duality. This is a kind of duality in string theory between a theory with large strings and one with small strings. The talk describes how this works, where we have a space $M \times S^1_r$ whose fibres at each point are strings of radius $r$, and a dual space $M \times S^1_{1/r}$ with radius $1/r$. There’s a correspondence space $M \times S^1_r \times S^1_{1/r}$, which has projection maps down into the two situations. Fields, being forms on such a fibration, can be “transferred” through this correspondence space by a “pull-back and push-forward” (with, in the middle, a wedge with a form that mixes the two directions, $\exp(d\theta_r \wedge d\theta_{1/r})$). But to be physically the right kind of field, these “forms” actually need to be representing cohomology classes in the differential refinement of K-theory.

Quantum Gravity etc.

Now, part of the point of this workshop was to try to build, or anyway maintain, some bridges between the kind of work in geometry and topology which I’ve been describing and the world of physics. There are some particular versions of physical theories where these ideas have come up. I’ve already touched on string theory along the way (there weren’t many talks about it from a physicist’s point of view), so this will mostly be about a different sort of approach.

Benjamin Bahr outlined this approach for our mathematician-heavy audience in his talk on “Spin Foam Operators” (see also for instance this paper). The point is that one approach to quantum gravity has a theory whose “kinematics” (the description of the state of a system at a given time) is described by “spin networks” (based on $SU(2)$ gauge theory), as described back in the pre-school post. These span a Hilbert space, so the “dynamical” issue of such models is how to get operators between Hilbert spaces from “foams” that interpolate between such networks – that is, what kind of extra data they might need, and how to assign amplitudes to faces and edges etc. to define an operator, which (assuming a “local” theory where distant parts of the foam affect the result independently) will be of the form:

$$Z(K,\rho,P) = \Big(\prod_f A_f\Big) \prod_v Tr_v\Big(\bigotimes_{e \ni v} P_e\Big)$$

where $K$ is a particular complex (foam), $\rho$ is a way of assigning irreps to faces of the foam, and $P$ is the assignment of intertwiners to edges. Later on, one can take a discrete version of a path integral by summing over all these $(K,\rho,P)$. Here we have a product over faces and one over vertices, with an amplitude $A_f$ assigned (somehow – this is the issue) to faces. The trace is over all the representation spaces assigned to the edges that are incident to a vertex (this is essentially the only consistent way to assign an amplitude to a vertex). If we also consider spacetimes with boundary, we need some amplitudes $B_e$ at the boundary edges, as well. A big part of the work with such models is finding such amplitudes that meet some nice conditions.

Some of these conditions are inherently necessary – to ensure the theory is invariant under gauge transformations, or (formally) changing orientations of faces. Others are considered optional, though to me “functoriality” (that the way of deriving operators respects the gluing-together of foams) seems unavoidable – it imposes that the boundary amplitudes have to be found from the face amplitudes $A_f$ in one specific way. Some other nice conditions might be: that the operator $Z$ depends only on the topology of the foam $K$ (which demands that the edge operators $P_e$ be projections); that $Z$ is invariant under subdivision of the foam (which implies the amplitudes have to be $A_f = \dim(\rho_f)$).

Assuming all these means the only choice is exactly which sub-projection $P_e$ is of the projection onto the gauge-invariant part of the representation space for the faces attached to edge $e$. The rest of the talk discussed this, including some examples (models for BF-theory, the Barrett-Crane model and the more recent EPRL/FK model), and finished up by discussing issues about getting a nice continuum limit by way of “coarse graining”.

On a related subject, Bianca Dittrich spoke about “Dynamics and Diffeomorphism Symmetry in Discrete Quantum Gravity”, which explained the nature of some of the hard problems with this sort of discrete model of quantum gravity. She began by asking what sort of models (i.e. which choices of amplitudes) in such discrete models would actually produce a nice continuum theory – since gravity, classically, is described in terms of spacetimes which are continua, and the quantum theory must look like this in some approximation. The point is to think of these as “coarse-graining” of a very fine (perfect, in the limit) approximation to the continuum by a triangulation with a very short length-scale for the edges. Coarse graining means discarding some of the edges to get a coarser approximation (perhaps repeatedly). If the operator $Z$ happens to be triangulation-independent, then coarse graining makes no difference to the result, nor does the converse process of refining the triangulation. So one question is: if we expect the continuum limit to be diffeomorphism invariant (as is General Relativity), what does this say at the discrete level? The relation between diffeomorphism invariance and triangulation invariance has been described by Hendryk Pfeiffer, and in the reverse direction by Dittrich et al.

Actually constructing the dynamics for a system like this in a nice way (“canonical dynamics with anomaly-free constraints”) is still a big problem, which Bianca suggested might be approached by this coarse-graining idea. Now, if a theory is topological (here we get the link to TQFT), such as electromagnetism in 2D, or (linearized) gravity in 3D, coarse graining doesn’t change much. But otherwise, changing the length scale means changing the action for the continuum limit of the theory. This is related to renormalization: one starts with a “naive” guess at a theory, then refines it (in this case, by the coarse-graining process), which changes the action for the theory, until arriving at (or approximating to) a fixed point. Bianca showed an example, which produces a really huge, horrible action full of very complicated terms, which seems rather dissatisfying. What’s more, she pointed out that, unless the theory is topological, this always produces an action which is non-local – unlike the “naive” discrete theory. That is, the action can’t be described in terms of a bunch of non-interacting contributions from the field at individual points – instead, it’s some function which couples the field values at distant points (albeit in a way that falls off exponentially as the points get further apart).

In a more specific talk, Aleksandr Mikovic discussed “Finiteness and Semiclassical Limit of EPRL-FK Spin Foam Models”, looking at a particular example of such models which is the (relatively) new-and-improved candidate for quantum gravity mentioned above. This was a somewhat technical talk, which I didn’t entirely follow, but roughly, the way he went at this was through the techniques of perturbative QFT. That is, by looking at the theory in terms of an “effective action”, instead of some path integral over histories $\phi$ with action $S(\phi)$ – which looks like $\int d\phi \, e^{iS(\phi)}$. Starting with some classical history $\bar{\phi}$ – a stationary point of the action $S$ – the effective action $\Gamma(\bar{\phi})$ is an integral over small fluctuations $\phi$ around it of $e^{iS(\bar{\phi} + \phi)}$.
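To make the “integral over small fluctuations” a little more concrete, here is the usual stationary-phase sketch of the leading correction. It assumes units with $\hbar = 1$ and the simplified definition $e^{i\Gamma(\bar{\phi})} = \int d\phi \, e^{iS(\bar{\phi} + \phi)}$; the symbols $\Gamma$, $\bar{\phi}$ and $S''$ are my own notation for this illustration, and none of it is taken from the talk itself.

```latex
\begin{aligned}
S(\bar\phi + \phi) &= S(\bar\phi) + \tfrac12\, \phi \cdot S''(\bar\phi)\, \phi + O(\phi^3)
  && \text{(no linear term, since } S'(\bar\phi) = 0\text{)} \\
e^{i\Gamma(\bar\phi)} &\approx e^{iS(\bar\phi)} \int d\phi \; e^{\frac{i}{2}\, \phi \cdot S''(\bar\phi)\, \phi}
  \;\propto\; e^{iS(\bar\phi)} \, \det\big(S''(\bar\phi)\big)^{-1/2} \\
\Gamma(\bar\phi) &\approx S(\bar\phi) + \tfrac{i}{2}\, \mathrm{Tr} \log S''(\bar\phi) + \text{const}
\end{aligned}
```

So to this order the effective action is the classical action plus a correction controlled by the second variation of $S$ at the classical history, which is why the large-scale behaviour of the amplitudes matters for the semiclassical limit.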

He commented more on the distinction between the question of triangulation independence (which is crucial for using spin foams to give invariants of manifolds) and the question of whether the theory gives a good quantum theory of gravity – that’s the “semiclassical limit” part. (In light of the above, this seems to amount to asking if “diffeomorphism invariance” really extends through to the full theory, or is only approximately true, in the limiting case). Then the “finiteness” part has to do with the question of getting decent asymptotic behaviour for some of those weights mentioned above so as to give a nice effective action (if not necessarily triangulation independence). So, for instance, in the Ponzano-Regge model (which gives a nice invariant for manifolds), the vertex amplitudes $A_v$ are found by the 6j-symbols of representations. The asymptotics of the 6j symbols then becomes an issue – Aleksandr noted that to get a theory with a nice effective action, those 6j-symbols need to be scaled by a certain factor. This breaks triangulation independence (hence means we don’t have a good manifold invariant), but gives a physically nicer theory. In the case of 3D gravity, this is not what we want, but as he said, there isn’t a good a-priori reason to think it can’t give a good theory of 4D gravity.
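For reference, the asymptotic behaviour at stake is the classic Ponzano-Regge formula for large spins, sketched below. Here $V$ is the volume of the Euclidean tetrahedron with edge lengths $j_e + \tfrac12$ (assuming such a tetrahedron exists) and $\theta_e$ are its exterior dihedral angles. This is the textbook statement, not the specific rescaling discussed in the talk.

```latex
\begin{Bmatrix} j_1 & j_2 & j_3 \\ j_4 & j_5 & j_6 \end{Bmatrix}
\;\sim\; \frac{1}{\sqrt{12 \pi V}} \,
\cos\!\Big( \sum_{e=1}^{6} \big(j_e + \tfrac12\big)\, \theta_e + \frac{\pi}{4} \Big)
```

The argument of the cosine is the Regge action of the tetrahedron, which is the sense in which the state sum has 3D gravity as its semiclassical limit; the prefactor $V^{-1/2}$ is the sort of large-spin scaling that affects finiteness.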

Now, making a connection between these sorts of models and higher gauge theory, Aristide Baratin spoke about “2-Group Representations for State Sum Models”. This is a project with Baez, Freidel, and Wise, building on work by Crane and Sheppard (see my previous post, where Derek described the geometry of the representation theory for some 2-groups). The idea is to construct state-sum models where, at the kinematical level, edges are labelled by 2-group representations, faces by intertwiners, and tetrahedra by 2-intertwiners. (This assumes the foam is a triangulation – there’s a certain amount of back-and-forth in this area between this, and the Poincaré dual picture where we have 4-valent vertices). He discussed this in a couple of related cases – the Euclidean and Poincaré 2-groups, which are described by crossed modules with base groups $SO(4)$ or $SO(3,1)$ respectively, acting on the abelian group (of automorphisms of the identity) $\mathbb{R}^4$ in the obvious way. Then the analogues of the 6j symbols above, which are assigned to tetrahedra (or dually, vertices in a foam interpolating two kinematical states), are now 10j symbols assigned to 4-simplexes (or dually, vertices in the foam).

One nice thing about this setup is that there’s a good geometric interpretation of the kinematics – irreducible representations of these 2-groups pick out orbits of the action of the relevant group ($SO(4)$ or $SO(3,1)$) on $\mathbb{R}^4$. These are “mass shells” – radii of spheres in the Euclidean case, or proper length/time values that pick out hyperboloids in the Lorentzian case of $SO(3,1)$. Assigning these to edges has an obvious geometric meaning (as a proper length of the edge), which thus has a continuous spectrum. The areas and volumes interpreting the intertwiners and 2-intertwiners start to exhibit more of the discreteness you see in the usual formulation with representations of the $SO$ groups themselves. Finally, Aristide pointed out that this model originally arose not from an attempt to make a quantum gravity model, but from looking at Feynman diagrams in flat space (a sort of “quantum flat space” model), which is suggestively interesting, if not really conclusively proving anything.

Finally, Laurent Freidel gave a talk, “Classical Geometry of Spin Network States”, which challenged the idea that these states are exclusively about “quantum geometries”, and tried to give an account of how to interpret them as discrete, but classical. That is, the quantization of the classical phase space $T^*(\mathcal{A}/\mathcal{G})$ (the cotangent bundle of connections-mod-gauge) involves first a discretization to a spin-network phase space $\mathcal{P}_{\Gamma}$, and then a quantization to get a Hilbert space $\mathcal{H}_{\Gamma}$, and the hard part is the first step. The point is to see what the classical phase space is, and he describes it as a (symplectic) quotient $T^*(SU(2)^E)//SU(2)^V$, which starts by assigning $T^*(SU(2))$ to each edge, then reduced by gauge transformations. The puzzle is to interpret the states as geometries with some discrete aspect.

The answer is that one thinks of edges as describing (dual) faces, and vertices as describing some polytopes. For each $N$, there’s a $2(N-3)$-dimensional “shape space” of convex polytopes with $N$ faces of given fixed areas. This has a canonical symplectic structure, where lengths and interior angles at an edge are the canonically conjugate variables. Then the whole phase space describes ways of building geometries by gluing these things (associated to vertices) together at the corresponding faces whenever the two vertices are joined by an edge. Notice this is a bit strange, since there’s no particular reason the faces being glued will have the same shape: just the same area. An area-1 pentagon and an area-1 square associated to the same edge could be glued just fine. Then the classical geometry for one of these configurations is built of a bunch of flat polyhedra (i.e. with a flat metric and connection on them). Measuring distance across a face in this geometry is a little strange. Given two points inside adjacent cells, you measure orthogonal distance to the matched faces, and add in the distance between the points you arrive at (orthogonally) – assuming you glued the faces at the centre. This is a rather ugly-seeming geometry, but it’s symplectically isomorphic to the phase space of spin network states – so it’s these classical geometries that spin-foam QG is a quantization of. Maybe the ugliness should count against this model of quantum gravity – or maybe my aesthetic sense just needs work.
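The dimension of the shape space can be checked by a quick parameter count. This sketch assumes the standard description (via Minkowski’s theorem) of a convex polyhedron by its outward unit face normals $n_i$ and face areas $A_i$; that parametrization is my assumption for the illustration, not something spelled out in the talk.

```latex
\begin{aligned}
&\text{data: } n_1, \dots, n_N \in S^2 \text{ (unit normals), with fixed areas } A_1, \dots, A_N
  && 2N \text{ parameters} \\
&\text{closure: } \textstyle\sum_{i=1}^{N} A_i\, n_i = 0
  && -3 \\
&\text{modulo overall rotations in } SO(3)
  && -3 \\
&\dim(\text{shape space}) = 2N - 6 = 2(N-3)
\end{aligned}
```

For a tetrahedron ($N = 4$) this leaves a 2-dimensional space of shapes once the four areas are fixed, which is even-dimensional as a symplectic space has to be.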

(Laurent also gave another talk, which was originally scheduled as one of the school talks, but ended up being a very interesting exposition of the principle of “Relativity of Localization”, which is hard to shoehorn into the themes I’ve used here, and was anyway interesting enough that I’ll devote a separate post to it.)

March 17, 2011

Now for a more sketchy bunch of summaries of some talks presented at the HGTQGR workshop. I’ll organize this into a few themes which appeared repeatedly and which roughly line up with the topics in the title: in this post, variations on TQFT, plus 2-group and higher forms of gauge theory; in the next post, gerbes and cohomology, plus talks on discrete models of quantum gravity and suchlike physics.

TQFT and Variations

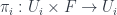

I start here for no better reason than the personal one that it lets me put my talk first, so I’m on familiar ground to start with, for which reason also I’ll probably give more details here than later on. So: a TQFT is a linear representation of the category of cobordisms – that is, a (symmetric monoidal) functor $Z : nCob \rightarrow Vect$, in the notation I mentioned in the first school post. An Extended TQFT is a higher functor $Z : nCob_k \rightarrow k\text{-}Vect$, representing a category of cobordisms with corners into a higher category of k-Vector spaces (for some definition of same). The essential point of my talk is that there’s a universal construction that can be used to build one of these at $k=2$, which relies on some way of representing $nCob_2$ into $Span(Gpd)$, whose objects are groupoids, and whose morphisms in $Hom(A,B)$ are pairs of groupoid homomorphisms $A \leftarrow X \rightarrow B$. The 2-morphisms have an analogous structure. The point is that there’s a 2-functor $\Lambda : Span(Gpd) \rightarrow 2Vect$ which takes representations of groupoids, at the level of objects; for morphisms, there is a “pull-push” operation that just uses the restricted and induced representation functors to move a representation across a span; the non-trivial (but still universal) bit is the 2-morphism map, which uses the fact that the restriction and induction functors are bi-adjoint, so there are units and counits to use. A construction using gauge theory gives groupoids of connections and gauge transformations for each manifold or cobordism. This recovers a form of the Dijkgraaf-Witten model. In principle, though, any way of getting a groupoid (really, a stack) associated to a space functorially will give an ETQFT this way. I finished up by suggesting what would need to be done to extend this up to higher codimension. To go to codimension 3, one would assign an object (codimension-3 manifold) a 3-vector space which is a representation 2-category of 2-groupoids of connections valued in 2-groups, and so on. There are some theorems about representations of n-groupoids which would need to be proved to make this work.
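As a small sanity check on the “groupoid of connections” picture, here is a sketch in Python of the simplest number the untwisted Dijkgraaf-Witten model produces. For a finite gauge group $G$, the groupoid of flat connections on a torus has commuting pairs of holonomies as objects and simultaneous conjugation as gauge transformations, so its groupoid cardinality is the number of commuting pairs divided by $|G|$. The function names and the choice of a symmetric group as the example are mine, purely for illustration; this is not the construction from the talk, just the count it should reproduce in this case.

```python
from fractions import Fraction
from itertools import permutations, product


def compose(p, q):
    """Compose permutations given as tuples: (p o q)(i) = p[q[i]]."""
    return tuple(p[i] for i in q)


def inverse(p):
    """Inverse permutation of p."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)


def symmetric_group(n):
    """All permutations of {0, ..., n-1}, standing in for a finite gauge group G."""
    return list(permutations(range(n)))


def torus_invariant(group):
    """Groupoid cardinality of flat G-connections on the torus.

    Objects are pairs of commuting holonomies (a, b); gauge transformations
    act by simultaneous conjugation, so the cardinality is
    #{(a, b) : ab = ba} / |G|.
    """
    commuting_pairs = sum(
        1 for a, b in product(group, repeat=2) if compose(a, b) == compose(b, a)
    )
    return Fraction(commuting_pairs, len(group))


def conjugacy_classes(group):
    """Set of conjugacy classes of the group, each as a frozenset of elements."""
    return {
        frozenset(compose(compose(g, h), inverse(g)) for g in group) for h in group
    }


if __name__ == "__main__":
    s3 = symmetric_group(3)
    print(torus_invariant(s3), len(conjugacy_classes(s3)))
```

The two printed numbers agree for any finite group, since the number of commuting pairs is $|G|$ times the number of conjugacy classes. That matches the expectation that the invariant of the torus counts the simple objects assigned to the circle.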

The fact that different constructions can give groupoids for spaces was used by the next speaker, Thomas Nikolaus, whose talk described another construction that uses the $\Lambda$ I mentioned above. This one produces “Equivariant Dijkgraaf-Witten Theory”. The point is that one gets groupoids for spaces in a new way. Before, we had, for a space $M$ a groupoid $\mathcal{A}_G(M)$ whose objects are $G$-connections (or, put another way, bundles-with-connection) and whose morphisms are gauge transformations. Now we suppose that there’s some group $J$ which acts weakly (i.e. an action defined up to isomorphism) on $G$. We think of this as describing “twisted bundles” over $M$. This is described by a quotient stack $\mathcal{A}_G(M)//J$ (which, as a groupoid, gets some extra isomorphisms showing where two objects are related by the $J$-action). So this gives a new map $nCob \rightarrow Span(Gpd)$, and applying $\Lambda$ gives a TQFT. The generating objects for the resulting 2-vector space are “twisted sectors” of the equivariant DW model. There was some more to the talk, including a description of how the DW model can be further mutated using a cocycle in the group cohomology of $G$, but I’ll let you look at the slides for that.

Next up was Jamie Vicary, who was talking about “(1,2,3)-TQFT”, which is another term for what I called “Extended” TQFT above, but specifying that the objects are 1-manifolds, the morphisms 2-manifolds, and the 2-morphisms are 3-manifolds. He was talking about a theorem that identifies oriented TQFT’s of this sort with “anomaly-free modular tensor categories” – which is widely believed, but in fact harder than commonly thought. It’s easy enough to see that such a TQFT $Z$ corresponds to a MTC – it’s the category $Z(S^1)$ assigned to the circle. What’s harder is showing that the TQFT’s are equivalent functors iff the categories are equivalent. This boils down, historically, to the difficulty of showing the category is rigid. Jamie was talking about a project with Bruce Bartlett and Chris Schommer-Pries, whose presentation of the cobordism category (described in the school post) was the basis of their proof.

Part of it amounts to giving a description of the TQFT in terms of certain string diagrams. Jamie kindly credited me with describing this point of view to him: that the codimension-2 manifolds in a TQFT can be thought of as “boundaries in space” – codimension-1 manifolds are either time-evolving boundaries, or else slices of space in which the boundaries live; top-dimension cobordisms are then time-evolving slices of space-with-boundary. (This should be only a heuristic way of thinking – certainly a generic TQFT has no literal notion of “time-evolution”, though in that (2+1) quantum gravity can be seen as a TQFT, there’s at least one case where this picture could be taken literally.) Then part of their proof involves showing that the cobordisms can be characterized by taking vector spaces on the source and target manifolds spanned by the generating objects, and finding the functors assigned to cobordisms in terms of sums over all “string diagrams” (particle worldlines, if you like) bounded by the evolving boundaries. Jamie described this as a “topological path integral”. Then one has to describe the string diagram calculus – rigidity follows from the “yanking” rule, for instance, and this follows from Morse theory as in Chris’ presentation of the cobordism category.

There was a little more discussion about what the various properties (proved in a similar way) imply. One is “cloaking” – the fact that a 2-morphism which “creates a handle” is invisible to the string diagrams in the sense that it introduces a sum over all diagrams with a string “looped” around the new handle, but this sum gives a result that’s equal to the original map (in any “pivotal” tensor category, as here).

Chronologically before all these, one of the first talks on such a topic was by Rafael Diaz, on Homological Quantum Field Theory, or HLQFT for short, which is a rather different sort of construction. Remember that Homotopy QFT, as described in my summary of Tim Porter’s school sessions, is about linear representations of what I’ll for now call $\mathbf{Cob}_d(X)$, whose morphisms are $d$-dimensional cobordisms equipped with maps into a space $X$ up to homotopy. HLQFT instead considers cobordisms equipped with maps taken up to homology.

Specifically, there’s some space $M$, say a manifold, with some distinguished submanifolds (possibly boundary components; possibly just embedded submanifolds; possibly even all of $M$ for a degenerate case). Then we define $\mathbf{Cob}_d^M$ to have objects which are $(d-1)$-manifolds equipped with maps into $M$ which land on the distinguished submanifolds (to make composition work nicely, we in fact assume they map to a single point). Morphisms in $\mathbf{Cob}_d^M$ are trickier, and look like $(N, \alpha, \xi)$: a cobordism $N$ in this category is likewise equipped with a map $\alpha$ from its boundary into $M$ which recovers the maps on its objects. That $\xi$ is a homology class of maps from $N$ to $M$, which agrees with $\alpha$. This forms a monoidal category as with standard cobordisms. Then HLQFT is about representations of this category. One simple case Rafael described is the dimension-1 case, where objects are (ordered sets of) points equipped with maps that pick out chosen submanifolds of $M$, and morphisms are just braids equipped with homology classes of “paths” joining up the source and target submanifolds. Then a representation might, e.g., describe how to evolve a homology class on the starting manifold to one on the target by transporting along such a path-up-to-homology. In higher dimensions, the evolution is naturally more complicated.

A slightly looser fit to this section is the talk by Thomas Krajewski, “Quasi-Quantum Groups from Strings” (see this) – he was talking about how certain algebraic structures arise from “string worldsheets”, which are another way to describe cobordisms. This does somewhat resemble the way an algebraic structure (Frobenius algebra) is related to a 2D TQFT, but here the string worldsheets are interacting with a 3-form field, $H$ (the curvature of that 2-form field $B$ of string theory) and things needn’t be topological, so the result is somewhat different.

Part of the point is that quantizing such a thing gives a higher version of what happens for quantizing a moving particle in a gauge field. In the particle case, one comes up with a line bundle (of which sections form the Hilbert space) and in the string case one comes up with a gerbe; for the particle, this involves an associated 2-cocycle, and for the string a 3-cocycle; for the particle, one ends up producing a twisted group algebra, and for the string, this is where one gets a “quasi-quantum group”. The algebraic structures, as in the TQFT situation, come from, for instance, the “pants” cobordism which gives a multiplication and a comultiplication (by giving maps $A \otimes A \rightarrow A$ or the reverse, where $A$ is the object assigned to a circle).
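To make the particle-case statement concrete, here is a minimal sketch of a twisted group algebra, where basis vectors multiply as $e_g e_h = \alpha(g,h) e_{gh}$ for a 2-cocycle $\alpha$. The group $\mathbb{Z}_2 \times \mathbb{Z}_2$ and the particular cocycle are my own toy choices for illustration, not anything from the talk.

```python
from itertools import product

# Toy group (an assumption for illustration): Z_2 x Z_2, written as pairs of bits.
G = list(product((0, 1), repeat=2))

def add(g, h):
    """Group law on Z_2 x Z_2."""
    return ((g[0] + h[0]) % 2, (g[1] + h[1]) % 2)

def alpha(g, h):
    """A hypothetical 2-cocycle with values in {+1, -1}: (-1)^(g_2 * h_1)."""
    return -1 if g[1] * h[0] else 1

def is_2cocycle():
    """Check alpha(g,h) alpha(g+h,k) == alpha(h,k) alpha(g,h+k) for all g, h, k."""
    return all(
        alpha(g, h) * alpha(add(g, h), k) == alpha(h, k) * alpha(g, add(h, k))
        for g in G for h in G for k in G
    )

def twisted_product(x, y):
    """Multiply elements of the twisted group algebra.

    Elements are dicts {group element: coefficient}; on basis vectors
    e_g * e_h = alpha(g, h) * e_{g+h}.
    """
    out = {}
    for g, a in x.items():
        for h, b in y.items():
            gh = add(g, h)
            out[gh] = out.get(gh, 0) + a * b * alpha(g, h)
    return {g: c for g, c in out.items() if c != 0}

def basis(g):
    """The basis vector e_g."""
    return {g: 1}

def is_associative():
    """Associativity on basis vectors, which is what the cocycle condition buys."""
    return all(
        twisted_product(twisted_product(basis(g), basis(h)), basis(k))
        == twisted_product(basis(g), twisted_product(basis(h), basis(k)))
        for g in G for h in G for k in G
    )
```

Even though the group here is abelian, the twisted algebra is not commutative: `twisted_product(basis((0, 1)), basis((1, 0)))` and the product in the opposite order differ by a sign. The string case would replace the 2-cocycle by a 3-cocycle, which no longer gives a strictly associative algebra; that is not modelled here.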

There is some machinery along the way which I won’t describe in detail, except that it involves a tricomplex of forms – the gradings being form degree, the degree of a cocycle for group cohomology, and the number of overlaps. As observed before, gerbes and their higher versions have transition functions on higher numbers of overlapping local neighborhoods than mere bundles. (See the paper above for more)

Higher Gauge Theory

The talks I’ll summarize here touch on various aspects of higher-categorical connections or 2-groups (though at least one I’ll put off until later). The division between this and the section on gerbes is a little arbitrary, since of course they’re deeply connected, but I’m making some judgements about emphasis or P.O.V. here.

Apart from giving lectures in the school sessions, John Huerta also spoke on “Higher Supergroups for String Theory”, which brings “super” (i.e. $\mathbb{Z}_2$-graded) objects into higher gauge theory. There are “super” versions of vector spaces and manifolds, which decompose into “even” and “odd” graded parts (a.k.a. “bosonic” and “fermionic” parts). Thus there are “super” variants of Lie algebras and Lie groups, which are like the usual versions, except commutation properties have to take signs into account (e.g. a Lie superalgebra’s bracket is commutative if the product of the grades of two vectors is odd, anticommutative if it’s even). Then there are Lie 2-algebras and 2-groups as well – categories internal to this setting. The initial question has to do with whether one can integrate some Lie 2-algebra structures to Lie 2-group structures on a spacetime, which depends on the existence of some globally smooth cocycles. The point is that when spacetime is of certain special dimensions, this can work, namely dimensions 3, 4, 6, and 10. These are all 2 more than the real dimensions of the four real division algebras, $\mathbb{R}$, $\mathbb{C}$, $\mathbb{H}$ and $\mathbb{O}$. It’s in these dimensions that Lie 2-superalgebras can be integrated to Lie 2-supergroups. The essential reason is that a certain cocycle condition will hold because of the properties of a form on the Clifford algebras that are associated to the division algebras. (John has some related material here and here, though not about the 2-group case.)
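The sign rule for super brackets, and the dimension count, can be checked in a few lines. This is only a sketch under my own assumptions: the example algebra is the 2×2 matrices with diagonal matrices even and off-diagonal ones odd, and the names `N`, `Q`, `Qbar` are mine, not John’s.

```python
def matmul(a, b):
    """Product of two 2x2 matrices given as tuples of tuples."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def super_bracket(x, px, y, py):
    """[x, y] = xy - (-1)^(px*py) yx for homogeneous x, y of parity px, py."""
    sign = -1 if (px * py) % 2 else 1
    xy, yx = matmul(x, y), matmul(y, x)
    return tuple(
        tuple(xy[i][j] - sign * yx[i][j] for j in range(2)) for i in range(2)
    )

# Homogeneous elements of the toy superalgebra (hypothetical names).
N = ((1, 0), (0, -1))    # even (diagonal)
Q = ((0, 1), (0, 0))     # odd (off-diagonal)
Qbar = ((0, 0), (1, 0))  # odd (off-diagonal)

# Real dimensions of the four division algebras R, C, H, O.
DIVISION_ALGEBRA_DIMS = {"R": 1, "C": 2, "H": 4, "O": 8}

def special_spacetime_dims():
    """Each special spacetime dimension is 2 more than a division algebra's."""
    return [d + 2 for d in DIVISION_ALGEBRA_DIMS.values()]
```

With two odd elements the bracket is symmetric, so `super_bracket(Q, 1, Qbar, 1)` equals `super_bracket(Qbar, 1, Q, 1)`; as soon as one argument is even it is antisymmetric, as with `N` and `Q`. The helper `special_spacetime_dims()` just reproduces the list 3, 4, 6, 10 from the division algebra dimensions.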

Since we’re talking about higher versions of Lie groups/algebras, an important bunch of concepts to categorify are those in representation theory. Derek Wise spoke on “2-Group Representations and Geometry”, based on work with Baez, Baratin and Freidel, most fully developed here, but summarized here. The point is to describe the representation theory of Lie 2-groups, in particular geometrically. They’re to be represented on (in general, infinite-dimensional) 2-vector spaces of some sort, which is chosen to be a category of measurable fields of Hilbert spaces on some measure space, which is called $H^X$ (intended to resemble, but not exactly be the same as, $\mathrm{Hilb}^X$, the space of “functors into $\mathrm{Hilb}$ from the space $X$”, the way Kapranov-Voevodsky 2-vector spaces can be described as $\mathrm{Vect}^k$). The first work on this was by Crane and Sheppeard, and also Yetter. One point is that for 2-groups, we have not only representations and intertwiners between them, but 2-intertwiners between these. One can describe these geometrically – part of which is a choice of that measure space $X$.

This done, we can say that a representation of a 2-group is a 2-functor $\rho$ from $\mathcal{G}$ into the 2-category of such 2-vector spaces, where $\mathcal{G}$ is seen as a one-object 2-category. Thinking about this geometrically, if we concretely describe $\mathcal{G}$ by the crossed module $(G, H, \rhd, \partial)$, a representation defines an action of $G$ on $X$, and a map $X \rightarrow H^*$ into the character group of $H$, so that $X$ thereby becomes a $G$-equivariant bundle over $H^*$. One consequence of this description is that it becomes possible to distinguish not only irreducible representations (bundles over a single orbit) and indecomposable ones (where the fibres are particularly simple homogeneous spaces), but an intermediate notion called “irretractable” (though it’s not clear how much this provides). An intertwining operator between reps over $X$ and $Y$ can be described in terms of a bundle of Hilbert spaces – which is itself defined over the pullback of $X$ and $Y$ seen as $G$-bundles over $H^*$. A 2-intertwiner is a fibre-wise map between two such things. This geometric picture specializes in various ways for particular examples of 2-groups. A physically interesting one, studied by Crane and Sheppeard and expanded on in that paper of [BBFW] up above, deals with the Poincaré 2-group, where irreducible representations live over mass-shells in Minkowski space (or rather, the dual of $H \cong \mathbb{R}^{3,1}$).
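As a finite toy analogue of this picture (my own, and much cruder than the Poincaré 2-group), one can take a crossed module with $H = \mathbb{Z}_3$, $G = \{\pm 1\}$ acting on $H$ by multiplication, and trivial $\partial$, then compute the orbits of $G$ on the character group of $H$. These orbits play the role the mass-shells play above. Everything named in the sketch is an assumption for illustration.

```python
# Toy crossed module (an assumption, not from the talk):
# H = Z_3 written additively, G = units mod 3 = {1, 2}, i.e. {+1, -1}.
H = (0, 1, 2)
G = (1, 2)
INVERSE = {1: 1, 2: 2}  # inverses in G, since 2 * 2 = 1 mod 3

def act(g, h):
    """Action of G on H by multiplication mod 3."""
    return (g * h) % 3

def boundary(h):
    """Trivial boundary map H -> G, sending everything to the identity."""
    return 1

def is_crossed_module():
    """Check equivariance of the boundary map and the Peiffer identity."""
    equivariant = all(
        boundary(act(g, h)) == (g * boundary(h) * INVERSE[g]) % 3
        for g in G for h in H
    )
    # For abelian H written additively, conjugation h + k - h is just k.
    peiffer = all(act(boundary(h), k) == (h + k - h) % 3 for h in H for k in H)
    return equivariant and peiffer

def dual_act(g, k):
    """Induced action on characters chi_k(h) = exp(2*pi*i*k*h/3), indexed by k."""
    return (INVERSE[g] * k) % 3

def character_orbits():
    """Orbits of G on the character group of H, in order of first appearance."""
    seen, result = set(), []
    for k in H:
        if k not in seen:
            orbit = frozenset(dual_act(g, k) for g in G)
            seen |= orbit
            result.append(orbit)
    return result
```

Here `character_orbits()` finds two orbits, the trivial character on its own and the pair of nontrivial characters swapped by $-1$. In the language above, an irreducible representation would live over just one of them.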